Overview:

Decision Tree Regressor is a powerful machine learning algorithm used for regression tasks, providing clear interpretability and the ability to model complex relationships. OtasML offers various configuration options to customize decision tree regressors for optimal performance.

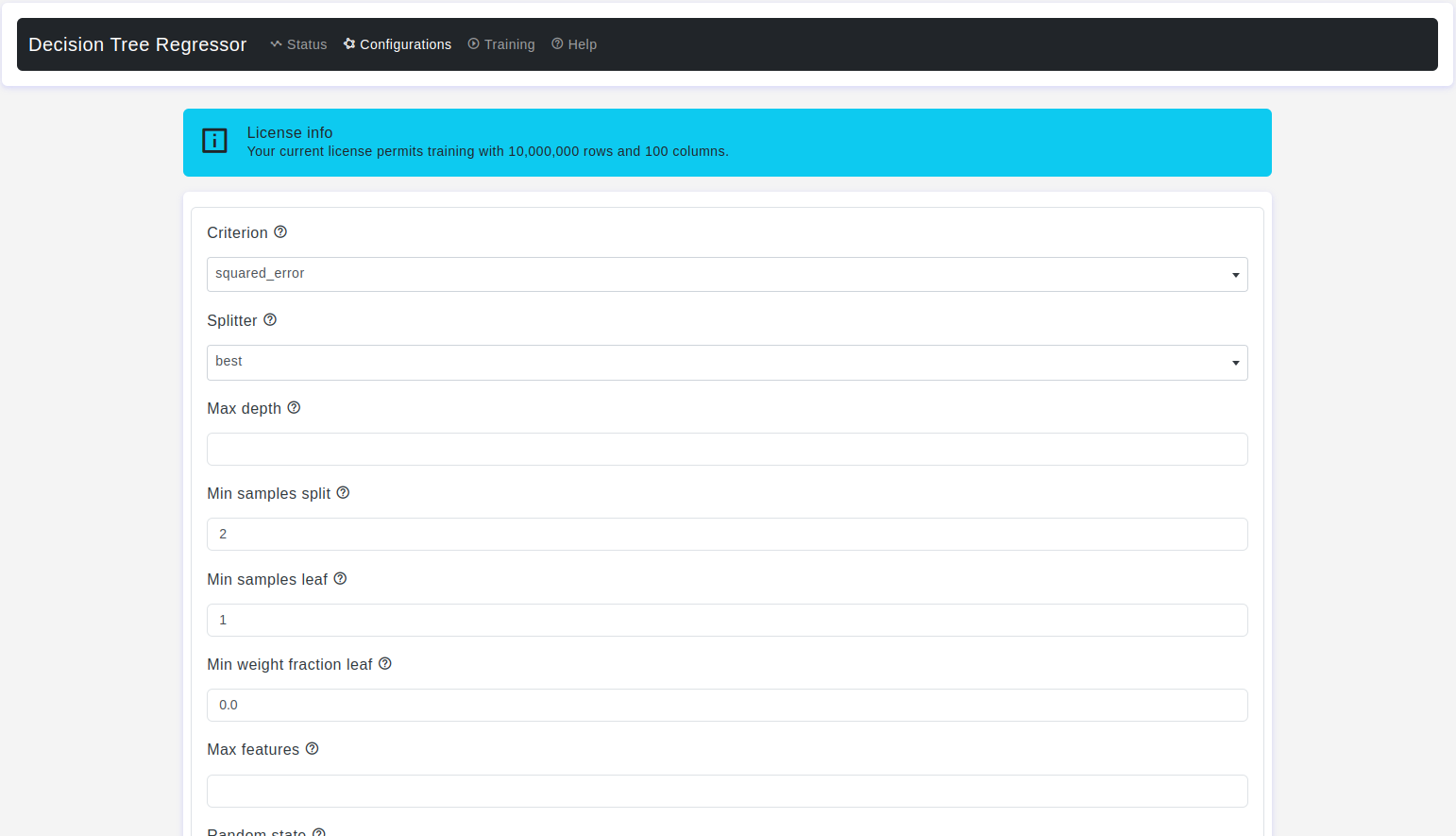

Configurations page:

The Configurations page allows users to adjust various parameters of the decision tree regressor model. Here are the details:

Criterion

- Default Value: squared_error

- Description: It is a parameter is used to specify the criterion for measuring the quality of a split when constructing the decision tree. The criterion parameter affects how the algorithm decides where and how to split the data at each node of the tree.

- Squared Error (

squared_errorormse): This is the Mean Squared Error criterion, which measures the quality of a split by calculating the mean squared error of the target values in each node. - Friedman Mean Squared Error (

friedman_mse): This criterion is based on the Friedman's improvement score and is used for regression problems. It aims to minimize the mean squared error with improvements based on mean values of the target variable. - Mean Absolute Error (

absolute_errorormae): This is the Mean Absolute Error criterion, which measures the quality of a split by calculating the mean absolute difference between the actual target values and the predicted values in each node. - Poisson Deviance (

poisson): This criterion is specific to Poisson regression, a type of regression used when modeling count data. It measures the quality of a split based on Poisson deviance, which is a measure of how well the model fits the Poisson distribution.

- Squared Error (

Splitter

- Default Value: best

- Description: It is a parameter is used to specify the strategy used for selecting the feature to split on at each node of the decision tree. It controls how the decision tree algorithm decides which feature to use for making splits

best: This is the default value for splitter. It selects the best feature to split on at each node based on a criterion such as MSE (Mean Squared Error), MAE (Mean Absolute Error), or another criterion specified by the criterion parameter.Bestmeans that the algorithm evaluates all possible splits for each feature and selects the one that results in the best improvement according to the chosen criterion. This can be computationally expensive, especially for datasets with many features.random: When splitter is set torandom, the algorithm chooses a random subset of features and evaluates splits based on that subset. This strategy introduces randomness into the decision tree construction process. It can help prevent overfitting by adding diversity to the trees and reducing the complexity of the model. The number of features considered at each split is controlled by themax_featuresparameter.

Max Depth

- Default Value: None

- Description: It is a parameter is used to control the maximum depth or maximum number of levels in the decision tree. It determines how deep the tree can grow during the training process. Limiting the tree's depth is a way to prevent it from becoming overly complex and potentially overfitting the training data.

Min Samples Split

- Default Value: 2

- Description: It is a parameter is used to specify the minimum number of samples required to split an internal node during the construction of the decision tree. It controls when the algorithm should stop splitting nodes because a node with fewer samples than

min_samples_splitcannot be further subdivided.

Min Samples Leaf

- Default Value: 1

- Description: It is a parameter is used to specify the minimum number of samples required to be in a leaf node (a terminal node) of the decision tree. A leaf node is a node that does not split further, and it represents a prediction value for the target variable.

Min Weight Fraction Leaf

- Default Value: 0.0

- Description: It is a parameter is used to specify the minimum weighted fraction of the total sum of weights (of all the input samples) required to be in a leaf node. This parameter allows you to control the minimum amount of data, taking into account sample weights, that should be present in a leaf node.

Max Features

- Default Value: None

- Description: It is a parameter controls the maximum number of features that are considered when making a split decision at each node in the decision tree. It influences the randomization and feature selection process during tree construction.

Random State

- Default Value: None

- Description: It is used to control the randomness during the construction of the decision tree. It determines the random seed for the random number generator, which in turn influences certain aspects of the tree's construction.

Max Leaf Nodes

- Default Value: None

- Description: It is a parameter used to control the maximum number of leaf nodes in the decision tree. A leaf node is a terminal node that does not split further and represents a prediction value for the target variable. Setting

max_leaf_nodeslimits the number of leaf nodes in the tree.

Min Impurity Decrease

- Default Value: 0.0

- Description: It is used to control when a split is allowed during the construction of the decision tree. It specifies the minimum amount by which impurity (typically measured using the Gini impurity or mean squared error) must decrease for a split to be considered valid.

CCP Alpha

- Default Value: 0.0

- Description: It is used for Minimal Cost-Complexity Pruning. Pruning is a technique used to prevent overfitting by simplifying a decision tree. The

ccp_alphaparameter determines the complexity penalty that is applied during pruning. - Warning: Values must be non-negative

Test Size

- Default Value: 0.2

- Description: The

test sizeparameter is used when splitting the dataset into these subsets, and it specifies the portion of the data that will be used for testing.

Train Size

- Default Value: 0.8

- Description: The

train sizeparameter is used when splitting the dataset into these subsets, and it specifies the portion of the data that will be used for model training.

Conclusion

OtasML’s configuration options for decision tree regressor models allow users to tailor their models to specific datasets and prediction tasks. Adjusting parameters such as criterion, splitter, and max depth can significantly impact the model’s performance and behavior. Use this guide to fine-tune your decision tree regressor models and achieve superior predictive accuracy with OtasML.