Overview:

Random Forest is a versatile and robust machine-learning algorithm used for classification tasks. OtasML provides extensive parameters to fine-tune Random Forest classifiers for optimal performance. Below is a comprehensive guide to these parameters.

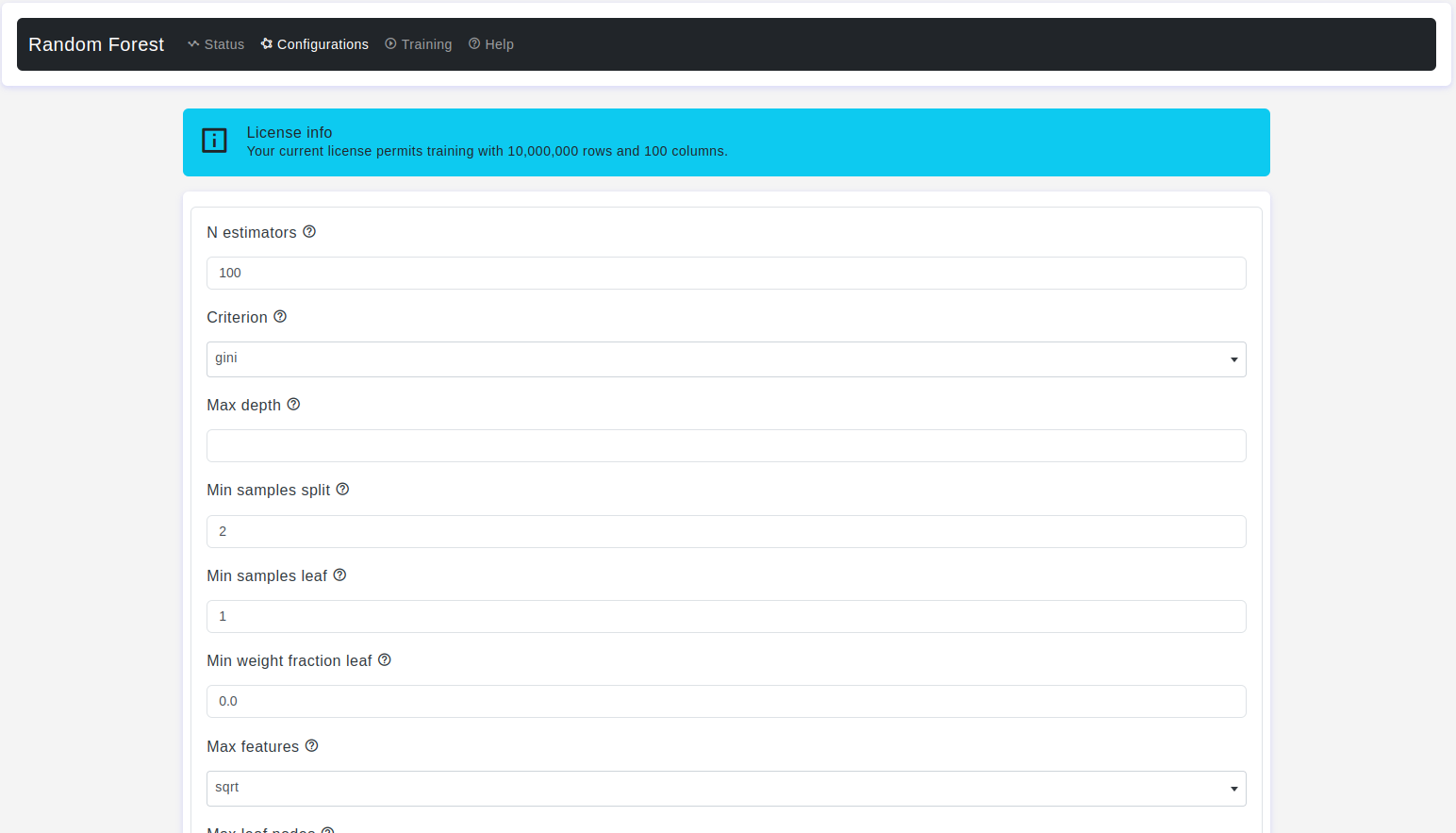

Configurations page:

The Configurations page allows users to adjust various parameters of the Random Forest classifier. Here are the details:

N estimators

- Default Value: 100

- Description: The number of trees in the forest. Increasing the number of trees generally improves model performance but also increases computation time.

Criterion

- Default Value: gini

- Description: It is used to specify the criterion for measuring the quality of a split when building decision trees within the

Random Forestensemble for classification tasks. The criterion determines how the algorithm decides where to split the data at each node of the decision tree.gini: This criterion uses the Gini impurity as a measure of the quality of a split. The Gini impurity quantifies the probability of misclassifying a randomly chosen element from the set. A lower Gini impurity indicates a better split.entropy: This criterion uses the entropy of the class labels at a node as a measure of impurity. Entropy measures the level of disorder or randomness in a set of labels. Similar to the Gini impurity, the decision tree algorithm will aim to minimize entropy when selecting the best split. In some cases, using entropy may lead to slightly different splits compared to Gini impurity.Log loss: is a different metric used to evaluate the performance of classification models, It's not a criterion used for splitting nodes in the decision trees, but rather a way to measure the quality of the final predictions made by the model.

- Warning: This parameter is tree-specific.

Max depth

- Default Value: None

- Description: The maximum depth of the tree. If None, then nodes are expanded until all leaves are pure or until all leaves contain less than

min_samples_splitsamples.

Min samples split

- Default Value: 2

- Description: It determines the minimum number of samples required to split an internal node of a decision tree within the Random Forest ensemble.

Min samples leaf

- Default Value: 1

- Description: It determines the minimum number of samples required to be in a leaf node of a decision tree within the Random Forest ensemble.

Min weight fraction leaf

- Default Value: 0.0

- Description: This parameter is used to specify the minimum weighted fraction of the sum total of weights (of all the input samples) required to be present in a leaf node of a decision tree within the Random Forest ensemble.

Max features

- Default Value: sqrt

- Description: It controls the maximum number of features that are considered when making a split in each decision tree within the Random Forest ensemble for classification tasks. It essentially limits the subset of features that can be used to determine the best split at each node.

sqrt: It setsmax_featuresto the square root of the total number of features. This is a common default choice and generally works well for many classification tasks.log2: Itsets max_featuresto the base-2 logarithm of the total number of features.None: It setsmax_featuresto the total number of features. In other words, all features are considered when making splits.

Max leaf nodes

- Default Value: None

- Description: Grow trees with max_leaf_nodes in best-first fashion. Best nodes are defined as relative reduction in impurity. If None then an unlimited number of leaf nodes.

Min impurity decrease

- Default Value: 0.0

- Description: It determines the minimum amount by which the impurity of a split must decrease for that split to be considered during the construction of each tree in the Random Forest. Impurity is a measure of the disorder or uncertainty in a set of data. In decision trees and Random Forests, impurity is commonly measured using metrics like Gini impurity or entropy for classification tasks. When building a tree, the algorithm considers splitting a node into child nodes, and it calculates the impurity of each potential split. If the impurity decrease achieved by a split is greater than or equal to the

min_impurity_decreasethreshold, the split is allowed; otherwise, it is not considered, and the algorithm stops splitting the node.

Bootstrap

- Default Value: True

- Description: It refers to the sampling technique used to create multiple subsets (also known as

bagsorbootstrapped samples) from the original training dataset. This technique is used to build individual decision trees within the ensemble. - Warning: Whether bootstrap samples are used when building trees. If False, the whole dataset is used to build each tree.

OOB score

- Default Value: False

- Description: The

oob_score(Out-of-Bag score) is a useful feature, that helps estimate the model's performance without the need for a separate validation set or cross-validation. It provides an out-of-sample evaluation of the Random Forest's accuracy by using the data points that were not included in thebootstrapsample for training each tree in the forest. - Warning: Only available if

bootstrap=True.

N jobs

- Default Value: None

- Description: This parameter is used to specify the number of CPU cores or threads to be used for parallel processing when training a Random Forest classifier. This parameter allows you to take advantage of multi-core processors to speed up the training process, especially for large datasets and a large number of trees in the forest.

- Warning:

-1means using all processors.

Random State

- Default Value: None

- Description: This parameter is used to control the randomness involved in the construction of the Random Forest classifier. It allows you to set a specific seed value, which ensures that the Random Forest's behavior is reproducible. This parameter is essential for achieving consistent and comparable results when you want to replicate an experiment or analysis.

Verbose

- Default Value: 0

- Description: This parameter is used to control the amount of information or logging that is displayed during the training process of a Random Forest classifier. It allows you to adjust the verbosity level to get more or less information about what's happening while the model is being trained.

verbose=0: No output or minimal output. You won't see any progress or information during training.verbose=1: Provides some level of progress information. You may see updates on the number of trees being built and the progress of each tree.verbose>1: More detailed output. This can include additional information about the training process for each tree, such as the depth of the tree, the number of nodes, and other details.

Warm start

- Default Value: False

- Description: It used to control whether you want to reuse the previously trained trees when fitting additional trees to an existing Random Forest model. This parameter is useful when you want to incrementally train a Random Forest by adding more trees to an existing forest without starting the training from scratch.

Class weight

- Default Value: None

- Description: It is used to address class imbalance issues in the training data. Class imbalance occurs when one or more classes have significantly fewer samples than others, making it challenging for the model to learn from the minority class. By adjusting the class weights, you can give more importance to the minority class during training, which can help the model achieve better performance.

balanced: uses the values of output to automatically adjust weights inversely proportional to class frequencies in the input data.balanced_subsample: is the same asbalancedexcept that weights are computed based on thebootstrapsample for every tree grown.

- Warning: these weights will be multiplied with

sample_weightifsample_weightis specified.

CCP alpha

- Default Value: 0.0

- Description: Complexity parameter used for Minimal Cost-Complexity Pruning. The subtree with the largest cost complexity that is smaller than

CCP alphawill be chosen. - Warning: By default, no pruning is performed

Max samples

- Default Value: None

- Description: It used to control the maximum number or fraction of samples that are used to train each individual tree in the Random Forest ensemble. This parameter allows you to create subsets of your training data, which can be useful for improving the diversity and performance of the ensemble.

- Warning: If

bootstrap is True, the number of samples to draw from input to train each base estimator.

Test size

- Default Value: 0.2

- Description: The

test sizeparameter is used when splitting the dataset into these subsets, and it specifies the portion of the data that will be used for testing.

Train size

- Default Value: 0.8

- Description: The

train sizeparameter is used when splitting the dataset into these subsets, and it specifies the portion of the data that will be used for model training.

Conclusion

OtasML’s configuration options for Random Forest classifiers allow users to tailor their models to specific datasets and classification challenges. Adjusting parameters like the number of estimators, max features, and class weights can significantly impact the model’s performance. Use this guide to fine-tune your Random Forest classifier and achieve superior predictive accuracy with OtasML.