Overview:

Gradient Boosting is a powerful machine learning technique that builds predictive models in the form of an ensemble of weak learners, typically decision trees. One of the key aspects of gradient boosting is the tuning of hyperparameters, which significantly impact the model's performance and behavior. In this article, we'll delve into the various hyperparameters used in gradient boosting and understand their roles in the training process.

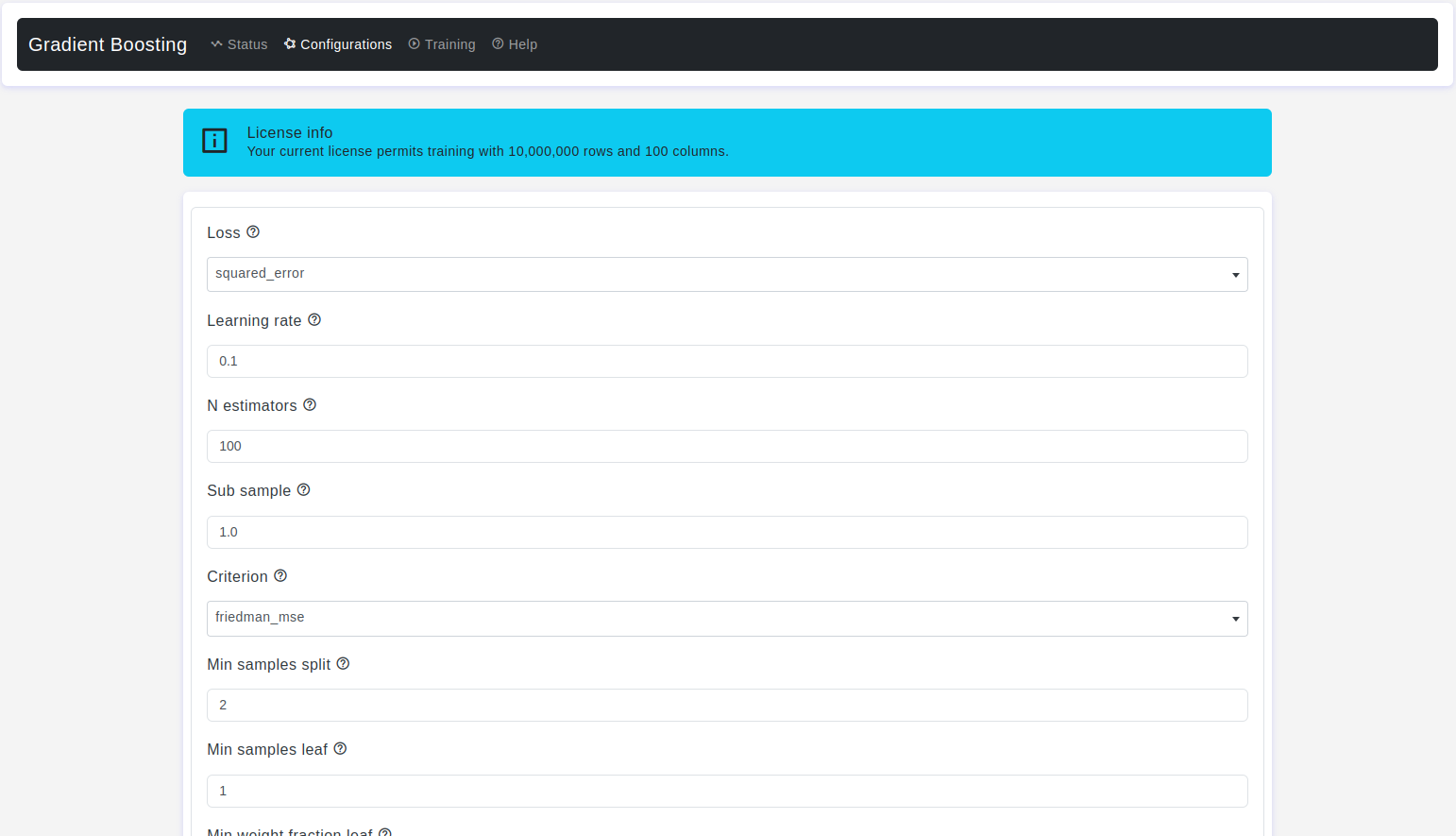

Configurations page:

The Configurations page allows users to adjust various parameters of the lasso regression model. Here are the details:

Loss

- Default Value: squared_error

- Description: The loss function quantifies how well the model's predictions match the actual target values and plays a crucial role in the training process by guiding the algorithm to minimize prediction errors. The choice of the loss function impacts the learning process and the type of regression problem the model is suited for.

- Options:

squared_error: This corresponds to the squared error loss, also known as the least squares loss or mean squared error (MSE). It is used to minimize the squared differences between the model's predictions and the actual target values.absolute_error: This corresponds to the absolute error loss, also known as the least absolute deviations (L1) loss. It minimizes the sum of the absolute differences between predictions and target values. It is robust to outliers compared to squared error loss.huber: Huber loss is a combination of the squared error and absolute error loss. It uses a parameter (typically denoted as alpha) to determine when to switch between the squared error (L2) and absolute error (L1) loss.Huber lossprovides a balance between the smoothness of squared error loss and the robustness of absolute error loss.quantile: This loss function is used for quantile regression. It allows you to specify a quantile level using the alpha parameter (e.g., 0.25 for the 25th percentile) to predict a specific quantile of the target distribution. It is useful when you want to model prediction intervals.

- Options:

Learning rate

- Default Value: 0.1

- Description: It is a hyperparameter that controls the step size or shrinkage applied to each weak learner (typically decision trees) during the gradient boosting process. It determines how much each weak learner's prediction is used to update the ensemble's predictions in the next iteration. The learning rate plays a crucial role in controlling the trade-off between model complexity and model accuracy.

- Warning: Values must be in the range (0.0, inf).

N estimators

- Default Value: 100

- Description: It is a hyperparameter that determines the number of boosting stages or weak learners (typically decision trees) to be used in the ensemble. Each boosting stage contributes incrementally to the final prediction, and increasing the number of estimators can lead to a more powerful and accurate model. However, it also comes with the cost of increased computational complexity.

- Warning: Values must be in the range (1, inf).

Sub sample

- Default Value: 1.0

- Description: It is a hyperparameter that controls the fraction of the training data that is randomly sampled (without replacement) for each boosting iteration. It determines the size of the subset of data used to train each weak learner (typically decision trees) in the ensemble. The subsample parameter can be used to implement a technique called

Stochastic Gradient BoostingorGradient Boosting with subsampling. - Warning: Values must be in the range (0.0, 1.0).

Criterion

- Default Value: friedman_mse

- Description: It is used to specify the criterion for measuring the quality of a split when constructing decision trees, which are the base weak learners used in gradient boosting. Decision trees are used as weak learners within gradient boosting to create an ensemble of trees.

- Options:

friedman_mse: This criterion uses Friedman's mean squared error as the measure of the quality of a split. It is a specific variant of mean squared error tailored for gradient boosting and is often a good choice for regression problems.squared_error(ormse): This criterion uses the traditional mean squared error (MSE) as the measure of the quality of a split. It is based on minimizing the variance of the target variable within each node of the tree and is also suitable for regression problems.

- Options:

Min samples split

- Default Value: 2

- Description: It is a hyperparameter that determines the minimum number of samples required to perform a split at a node when constructing decision trees, which are the base weak learners used in gradient boosting. It controls the minimum size of a node before further splitting it into child nodes.

- Warning: Values must be in the range (2, inf).

Min samples leaf

- Default Value: 1

- Description: It is a hyperparameter that specifies the minimum number of samples (or a fraction of the total number of samples) required to create a leaf node when constructing decision trees, which are the base weak learners used in gradient boosting. It controls the minimum size of the terminal nodes (leaves) in the decision trees.

- Warning: Values must be in the range (1, inf).

Min weight fraction leaf

- Default Value: 0.0

- Description: It is a hyperparameter that allows you to specify the minimum weighted fraction of the total sum of instance weights (sample weights) required to create a leaf node when constructing decision trees. This parameter provides a way to control the minimum node size based on the weighted number of samples rather than the raw count of samples.

- Warning: Values must be in the range (0.0, 0.5).

Max depth

- Default Value: 3

- Description: It is a hyperparameter that determines the maximum depth of the individual decision trees (base learners) in the gradient-boosting ensemble. It controls the depth or complexity of the decision trees, which are used as weak learners.

- Warning: Values must be in the range (1, inf).

Min impurity decrease

- Default Value: 0.0

- Description: It is a hyperparameter that determines the minimum amount by which the impurity (or impurity reduction) must decrease for a node to be eligible for splitting when constructing decision trees, which are used as base learners in gradient boosting.

- Warning: Values must be in the range (0.0, inf).

Init

- Default Value: None

- Description: It is an optional hyperparameter that allows you to specify the initial predictions for the ensemble before training begins. It can be used to provide an initial guess or approximation for the target values before the boosting process refines these predictions.

Random state

- Default Value: None

- Description: It is used to control the randomness of the algorithm, particularly regarding the initial state and random sampling during the training process. It is a crucial parameter for ensuring the reproducibility of your results and for controlling the randomness in your gradient boosting model.

Max features

- Default Value: None

- Description: It is a hyperparameter that controls the number of features (or variables) to consider when making splits during the construction of individual decision trees, which are used as base learners in gradient boosting. It can be used to limit the subset of features considered at each split point.

- Warning: Values must be in the range (1, inf).

Alpha

- Default Value: 0.9

- Description: It is a hyperparameter that controls the regularization of the regression model. Specifically, it determines the amount of L1 (Lasso) regularization applied to the individual base learners (decision trees) used in the gradient boosting ensemble.

- Warning: Values must be in the range (0.1, 0.9).

Verbose

- Default Value: 0

- Description: It is used to control the amount of information or output that the algorithm provides during the training process. It determines whether or not the algorithm displays progress updates and diagnostic information as it builds the gradient boosting ensemble.

- Options:

verbose=0: No progress updates or diagnostic information are displayed during training. This is the quietest setting, suitable for cases where you don't need to monitor the training progress.verbose=1: Provides progress updates at each boosting iteration, displaying information about the current stage of training. This can be useful for monitoring the training process.verbose=2: Provides more detailed information during training, including the individual decision trees' construction. This level of verbosity is helpful for debugging and gaining insight into the model's behavior.Higher integer values: Increasing the value of verbose beyond 2 may provide even more detailed information, depending on the specific implementation of the algorithm.

- Options:

Max leaf nodes

- Default Value: None

- Description: It is a hyperparameter that sets an upper limit on the maximum number of leaf nodes in each individual decision tree (base learner) within the gradient boosting ensemble. It can be used to control the tree's depth indirectly by limiting the number of terminal nodes.

- Warning: Values must be in the range (2, inf), If None, then

unlimitednumber of leaf nodes.

Warm start

- Default Value: False

- Description: It is a boolean parameter that determines whether to reuse the solution from the previous call to the fit method as the initial starting point for training when you call fit again. It is used to continue training a gradient boosting ensemble with additional boosting iterations without restarting from scratch.

Validation fraction

- Default Value: 0.1

- Description: It is used for early stopping during the training process. It determines the proportion of the training data to set aside as a validation set to monitor the model's performance. Early stopping is a technique used to prevent overfitting by monitoring the model's performance on the validation set and stopping training when the performance stops improving.

- Warning: Values must be in the range (0.0, 1.0).

N iter no change

- Default Value: None

- Description: It is used in conjunction with early stopping to control when the training process should stop if there is no improvement in the model's performance on the validation set. It is particularly useful for preventing overfitting and efficiently training gradient-boosting models.

- Warning: Values must be in the range (1, inf).

Tol

- Default Value: 1e-4

- Description: It is a parameter (short for tolerance) that specifies the stopping criterion for the gradient boosting training process. Specifically, it controls when the training stops if the improvement in the chosen loss metric (e.g., mean squared error) becomes smaller than the specified tolerance. Additionally, if you set the

N iter no changeparameter to a positive integer value, training will also stop if there is no improvement in the loss metric for the specified number of consecutive iterations. - Warning: Values must be in the range (0.0, inf).

CCP alpha

- Default Value: 0.0

- Description: Complexity parameter used for Minimal Cost-Complexity Pruning. The subtree with the largest cost complexity that is smaller than

ccp_alphawill be chosen. - Warning: Values must be in the range (0.0, inf) and non-negative.

Test size

- Default Value: 0.2

- Description: The

test sizeparameter is used when splitting the dataset into these subsets, and it specifies the portion of the data that will be used for testing.

Train size

- Default Value: 0.8

- Description: The

train sizeparameter is used when splitting the dataset into these subsets, and it specifies the portion of the data that will be used for model training.

Conclusion

Hyperparameter tuning is a crucial aspect of gradient boosting, influencing model performance, complexity, and generalization. Understanding the roles and effects of these parameters is essential for effectively training and deploying gradient boosting models in various regression tasks.