Overview:

In the realm of machine learning, K-Nearest Neighbors (KNN) classification is a powerful yet intuitive algorithm widely used for various predictive tasks. OtasML enhances the usability of KNN by offering a visual interface to configure and optimize this algorithm effectively. This article delves into the specifics of KNN classification within OtasML, detailing how different configuration parameters can be adjusted for optimal performance.

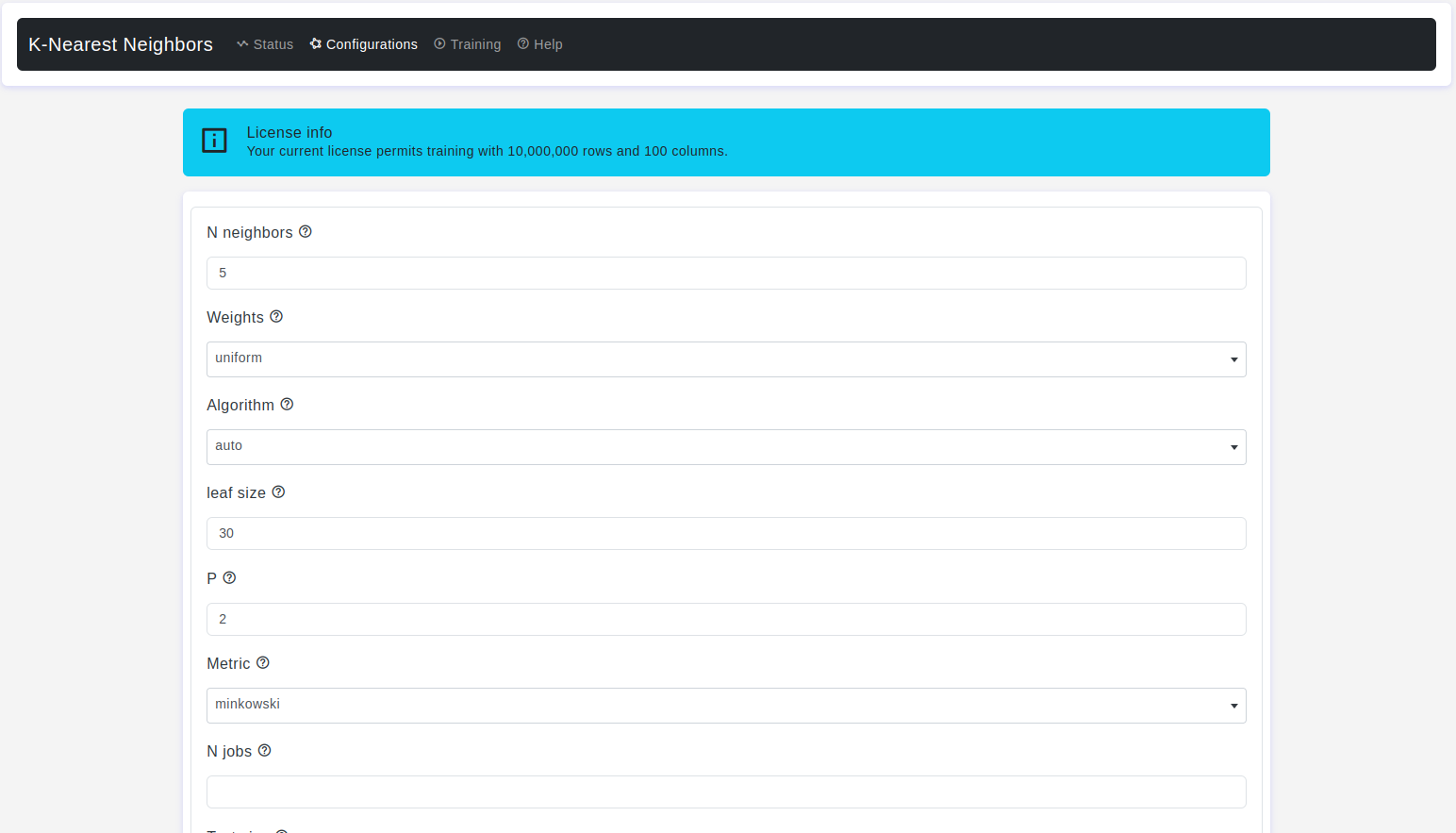

Configurations page:

The Configurations page is where users can fine-tune the settings of the K-Nearest Neighbors classifier. Let’s explore these parameters in detail:

N Neighbors

- Default Value: 5

- Description: Determines the number of nearest neighbors to consider when making a prediction for a new data point. A higher value may smooth out decision boundaries, while a lower value can capture more granular patterns.

Weights

- Default Value: Uniform

- Description: The weights parameter allows you to control how the contributions of the nearest neighbors are weighted when making predictions for a new data point.

UniformAll neighbors are weighted equally, meaning each neighbor’s vote contributes equally to the classification decision.DistanceThe weight of each neighbor's vote is proportional to the inverse of its distance to the new data point, giving closer neighbors a stronger influence.

Algorithm

- Default Value: Auto

- Description: The algorithm parameter allows you to choose the algorithm used to compute nearest neighbors when making predictions for new data points.

autoThis is the default setting. The algorithm automatically selects the most appropriate algorithm based on the input data. For dense data, it uses thebrutealgorithm, and for sparse data, it uses thekd_treeorball_treealgorithm.bruteThis algorithm performs a brute-force search through all training points to find the nearest neighbors. It is suitable for small datasets but can be slow for large datasets.kd_treeThis algorithm constructs a KD-tree to organize the training data and facilitate fast nearest neighbor searches. It is efficient for datasets with moderate dimensions and sizes.ball_treeThis algorithm constructs a ball tree to organize the training data, which is particularly efficient for datasets with higher dimensions.

- Warning:

ball_treewill use BallTree.kd_treewill use KDTree.brutewill use a brute-force search.

Leaf Size

- Default Value: 30

- Description: It is used to set the number of points at which the algorithm switches to a

brute-forcesearch method when using thekd_treeorball_treealgorithm for nearest neighbor search. At some point, when the number of points in a leaf node reaches the specifiedleaf_size, the algorithm switches to abrute-forceapproach, searching through all the points in that leaf.

P

- Default Value: 2

- Description: This parameter is used to control the power parameter for the Minkowski metric, which is the distance metric used in the k-nearest neighbors algorithm.

When p = 1It is equivalent to using the Manhattan distance (L1 distance).When p = 2It is equivalent to using the Euclidean distance (L2 distance).For arbitrary values of pthe Minkowski distance with that specific power is used.

Metric

- Default Value: Minkowski

- Description: It allows you to specify the distance metric used to measure the distance between data points when performing nearest neighbor search.

Euclidean:The default distance metric, also known as L2 distance or Euclidean distance.Manhattan:Also known as L1 distance or Manhattan distance.Minkowski:The general form of distance, where you can specify the power parameter p (usually 2 for Euclidean and 1 for Manhattan).Cityblock (Manhattan):Also known as the L1 distance or Manhattan distance, this metric calculates the absolute differences between the coordinates of two points along each dimension and sums them up. It represents the shortest path you would take when moving between two points in a city where the streets are laid out in a grid.Cosine:The cosine distance metric calculates the cosine of the angle between two vectors. It's commonly used in text mining and recommendation systems to measure the similarity between two vectors by considering their orientation rather than magnitude.Haversine:The haversine distance is used for measuring distances between two points on the surface of a sphere. It's particularly useful for geographical coordinates, such as latitude and longitude, and takes into account the curvature of the Earth.L1:Also known as the Manhattan distance or city block distance, L1 distance calculates the sum of the absolute differences between the coordinates of two points along each dimension. It's named after the length of the paths you would take when moving in a city grid.L2 (Euclidean):The L2 distance, also known as the Euclidean distance, calculates the straight-line distance between two points in a multidimensional space. It's the most common and intuitive distance metric, representing the length of the shortest path between the two points.Nan_euclidean:This might refer to a variation of the Euclidean distance that handles missing values (NaNs). While the standard Euclidean distance is not defined when one or both points have missing values, this variation might implement a way to handle missing values in distance calculations.

N Jobs

- Default Value: None

- Description: Refers to the number of CPU cores or parallel processes utilized for computations. Parallelism can significantly speed up computationally intensive tasks. Setting

n_jobs = -1uses all processors. - Warning:

-1means using all processors.

Test Size

- Default Value: 0.2

- Description: The

test sizeparameter is used when splitting the dataset into these subsets, and it specifies the portion of the data that will be used for testing.

Train Size

- Default Value: 0.8

- Description: The

train sizeparameter is used when splitting the dataset into these subsets, and it specifies the portion of the data that will be used for model training.

Conclusion

OtasML simplifies the configuration and optimization of the K-Nearest Neighbors classifier. By understanding and utilizing the detailed configurations available, users can fine-tune their models to achieve optimal performance on their datasets. Whether adjusting the number of neighbors, selecting the appropriate algorithm, or fine-tuning distance metrics, OtasML provides the tools needed to build accurate and reliable KNN models. Explore OtasML today and elevate your data science projects with visual machine learning.